1. Introduction

This article is the third in a series on AI agents in actuarial work. Article 1 surveyed the machinery behind the acceleration in AI capability. Article 2 mapped the knock-on effects for modelling.

This third article focuses on the impact on actuarial careers. For decades, technology has altered the tools of the actuarial craft, from the ubiquitous spreadsheet to the promise of low-code platforms. Yet these tools only changed how actuaries calculated; AI agents are poised to automate the calculation itself. As the entire modelling process becomes the domain of the machine, we must ask: what is the human's purpose when their core function is commoditised?

2. The Velocity of Change: Extrapolating from the AI 2027 Forecast

Recent analyses from some AI forecasters offer a significantly accelerated timeline for technological disruption. The “AI 2027” scenario (Kokotajlo et al., 2025) proposes that the advent of superhuman agents will precipitate major economic and geopolitical upheaval over the next few years. The authors support this forecast by referencing documented surges in AI capability, specifically the transition from assistive LLM 'copilots' to autonomous agents capable of performing work that would normally take a human hours or days. The report hypothesises a diffusion of these systems so rapid that professions and regulatory frameworks have little time to adapt.

By contrast, insurance and actuarial work operate within a tradition where feedback loops are measured in years, where regulatory consultations unfold over decades, and where validation and review are embedded at every junction. Major changes are shaped as much by debate and control as by technological feasibility: consider Solvency II’s gestation, from the earliest QIS exercises of 2006 to practical implementation in 2016. While truly useful agentic systems might emerge within the frontier labs in the late 2020s, insurance businesses will take time to deploy them in their operations, and longer still for these systems to go from novel to business as usual. This article therefore looks to 2035, a point intended to sit beyond the initial upheaval, by which agent-driven actuarial workflows have become embedded in business-as-usual tasks. Fittingly, 2035 is also the year in which the film I, Robot is set.

While this lag might frustrate technophiles, it preserves the conservatism of the sector. As we follow this curve, the question is not whether actuarial roles will change, but how thoroughly, at what pace, and towards which ends. The incentive will be large; while AI may not yet beat a top-tier expert, it will become demonstrably better, faster, and cheaper for most routine tasks. This reality will create a powerful economic driver, not for simple cost reduction, but for a strategic reallocation of labour and capital within organisations.

3. The Evolution of Actuarial Work: From Calculation to Curation

Creating a cashflow projection historically involved translating product specifications into formulas, reconciling inputs, testing edge cases, applying stress scenarios, creating new validation controls, and finally, producing a report. In the 2030s, AI agents will possess the breadth of knowledge and reasoning skills necessary to perform actuarial tasks as accurately as a human, provided they receive the same level of instruction and documentation.

What remains, and indeed grows in salience, is the work that cannot be performed with straightforward guidance. Human judgment will not be a single skill but a hierarchy of them. While agents may become adept at suggesting data-driven assumptions, the actuary's value will lie in meta-level judgment: assessing the appropriateness of the model itself, overriding assumptions based on contextual knowledge, and synthesising the “why” behind the results. In this evolving landscape, the actuary's contributions shift from routine computation to higher-order responsibilities, particularly in areas such as:

Interpretation and Exception Handling: Agents can surface outputs, but do not (yet) excel at recognising when a change in assumptions, while statistically innocuous, signals a business paradigm shift.

Curation and Validation: While routine reconciling becomes a machine task, the review of model changes, especially in uncharted lines or in response to emerging risks (climate, cyber, sociopolitical), requires nuanced scepticism.

Governance and Assurance: As automation advances, actuaries play a vital role in upholding transparency and regulatory compliance. Actuaries will need to review the documentation and reasoning of these AI systems to ensure that any actuarial judgement they provide is sound.

Simply attributing a decision to "the machine" will never suffice. Firms will need to invest in robust control frameworks: comprehensive audit trails, versioned prompts, role-based permissions, detailed change logs, and systems that ensure outputs from these agents are fully traceable and reproducible. This evolution will add a new dimension to actuarial practice, where the real value lies not just in the models themselves, but in the infrastructure that assures their validity, transparency, and alignment with business and regulatory standards.
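To make the idea of traceable and reproducible agent output more concrete, the following is a minimal sketch of an audit record that ties an output to a versioned prompt and its inputs. All names here (`AuditRecord`, `record_run`, `is_reproduced`, the agent and reviewer identifiers) are hypothetical and chosen for illustration only; a real control framework would sit on top of a firm's own logging, permissions, and model-governance tooling.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(payload: dict) -> str:
    """Return a stable SHA-256 hash of a JSON-serialisable payload."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AuditRecord:
    """One immutable entry in a (hypothetical) agent audit trail."""
    agent_id: str
    prompt_version: str
    prompt_hash: str
    inputs_hash: str
    output_hash: str
    reviewed_by: str  # the named human accountable for the result


def record_run(agent_id: str, prompt_version: str, prompt_text: str,
               inputs: dict, output: dict, reviewed_by: str) -> AuditRecord:
    """Capture what was asked, with which data, and what came back."""
    return AuditRecord(
        agent_id=agent_id,
        prompt_version=prompt_version,
        prompt_hash=fingerprint({"prompt": prompt_text}),
        inputs_hash=fingerprint(inputs),
        output_hash=fingerprint(output),
        reviewed_by=reviewed_by,
    )


def is_reproduced(original: AuditRecord, rerun_output: dict) -> bool:
    """Check whether a re-run produced exactly the output on record."""
    return fingerprint(rerun_output) == original.output_hash
```

In this sketch, a reviewer could re-run the same prompt version against the same inputs and call `is_reproduced` to confirm that the result on file can be regenerated. It assumes the agent's output is deterministic for a given prompt and input set, which in practice would itself need to be engineered and evidenced.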

4. Career Archetypes in the 2030s

In this section, we explore how common roles within the actuarial profession are likely to evolve as automation reduces the need for many routine tasks that currently make up much of actuaries’ daily work.

4.1. The Analyst: From Doer to Reviewer

Historically, “junior” actuaries spent long weeks wrangling data and reworking spreadsheets, learning by doing. By 2035, their onboarding will be very different at advanced firms. On day one, they will be paired with a general-purpose agent that has knowledge of industry-standard actuarial workflows and the firm’s proprietary models. The analyst’s job will not be to build calculations from first principles, but to prompt the agent and interrogate its output for inconsistencies.

A key challenge for the profession, however, will be ensuring this new 'learn-by-review' paradigm can still build the foundational intuition that was once the natural byproduct of entry-level procedural tasks. Recent research from the MIT Media Lab suggests that heavy reliance on AI for cognitive tasks can reduce user engagement and retention. In a controlled experiment, students who used a large language model assistant to help write essays demonstrated less neural and behavioral engagement with their work, and, months later, performed less effectively when asked to complete similar tasks without AI support. These findings highlight the risk that overdependence on AI could hinder skill development and ownership of work, reinforcing the importance of designing actuarial training that actively cultivates technical and critical thinking abilities, not just reviewing agent outputs, but practicing problem-solving and debugging firsthand (Kosmyna et al., 2025).

Some may argue that as technology advances, traditional debugging skills could become as obsolete as manual long division in the era of calculators. From this perspective, actuarial training should not simply preserve legacy skills, but instead anticipate the competencies actuaries will need in the future. Developing proficiency in prompting, interrogating, and critically assessing model outputs may warrant a fundamentally different approach to early-career education. For example, formal training could increasingly rely on realistic business simulations, where junior actuaries role-play complex scenarios, practicing not just technical review but also high-level critical thinking and judgment. In this way, the focus shifts from mechanical skill-building to nurturing the analytical mindset required to oversee, question, and trust the results produced by AI systems.

4.2. The Manager: Leading Hybrid AI Teams

The classic actuarial “manager” once coordinated teams, allocated tasks, and collated the results into a single deliverable. When leadership sees a machine deliver useful output faster and more accurately than a human team, the existing structures and expectations will change. In the 2030s, that manager will oversee both human analysts and a variety of agents specialised in coding, documentation, results quality assurance, or regulatory interpretation.

The manager will become more of an orchestrator: scheduling agent tasks, triaging issues surfaced by automated audit logs, ensuring hand-offs between human and machine are smooth, and, above all, synthesising outputs into a narrative and action plan for senior leadership.

The shift from human micro-management to AI-human orchestration leans into soft skills: risk communication, contextual awareness (e.g., understanding how systemic shifts, such as the adoption of new regulatory frameworks or market disruptions, impact model reliability), and the ability to frame uncertainties for an audience.

4.3. The Senior Risk Leader: Changing Objectives

Chief Actuaries, Chief Risk Officers and regulated role holders face a different challenge. While AI can draft reports and run complex models, regulatory accountability remains fundamentally human. The signature on that regulatory filing belongs to a person, not an algorithm.

However, the capacity freed up by AI automation allows senior actuaries to engage more deeply with strategy and opens up a different set of questions:

What risks are AI agents not capturing in their analysis?

What ethical principles should guide our AI adoption?

How much should we delegate to, or trust, our AI-driven processes?

The value future leaders add will lie in their ability to select which scenarios warrant escalation, to distinguish “model risk” from “real risk,” and to negotiate with boards and regulators to set acceptable boundaries for both.

4.4. Division of Labour: Agent-Ready vs Human-Only Tasks

It is worth grounding the discussion in the patterns of real-world work that underpin each role. As agents become capable of handling every well-defined, repeatable task in the actuarial toolkit, the boundary between automated execution and essential human contribution will be redrawn. We summarise how selected tasks may be allocated between human actuaries and AI agents in Table 4.4 below.

The above is just one possible allocation of work between humans and AI agents. The allocation may change over time as AI becomes more sophisticated or as regulatory pressures require more explicit human oversight. However, relative to the state of AI today, it is clear that many tasks once considered the preserve of human expertise will steadily come within the purview of sufficiently capable agentic systems.

5. Future-Proofing Actuaries

5.1. AI Literacy

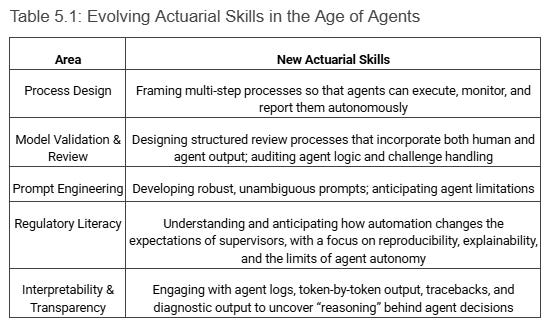

The transition to agent-augmented practice means a recasting of core actuarial skills, as knowledge of financial and statistical concepts grows less differentiating. In Table 5.1, we summarise what new skills might be required of the typical actuary in 2035.

5.2. AI Governance

Institutional reliance on AI magnifies the impact of small errors and latent biases. The following will be essential:

Embedding clear data lineage from source to output.

Enforcing policies that require agents to flag, document, and escalate ambiguity or outlier results rather than smoothing them away.

Maintaining auditable procedures for challenge, review, and deviation management, with clear records of who (human or machine) proposed, reviewed, and accepted each change.
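As an illustration of how such policies might be enforced in software rather than left to convention, the sketch below records who proposed and who reviewed each change, and refuses to accept an out-of-tolerance result without a human reviewer. The class and function names, the `agent:` and `human:` identifier prefixes, and the 5% tolerance are all assumptions made for this example, not a prescribed standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProposedChange:
    """A model or assumption change awaiting review (hypothetical schema)."""
    description: str
    proposed_by: str            # e.g. "agent:reserving-01" or "human:a.smith"
    relative_movement: float    # movement in the result vs. the prior run; 0.08 = +8%
    reviewed_by: Optional[str] = None
    accepted: bool = False


def needs_escalation(change: ProposedChange, tolerance: float = 0.05) -> bool:
    """Flag results that move by more than the agreed tolerance."""
    return abs(change.relative_movement) > tolerance


def accept(change: ProposedChange, reviewer: str,
           tolerance: float = 0.05) -> ProposedChange:
    """Accept a change, enforcing independent and (where needed) human review."""
    if reviewer == change.proposed_by:
        raise PermissionError("The proposer cannot review their own change.")
    if needs_escalation(change, tolerance) and not reviewer.startswith("human:"):
        raise PermissionError("Out-of-tolerance changes require human review.")
    # Record who signed off, so the trail shows proposer and reviewer.
    change.reviewed_by = reviewer
    change.accepted = True
    return change
```

Under these assumptions, a small movement could be signed off by a second agent, whereas a large one would be blocked until a named person reviews it. The outlier is escalated rather than smoothed away, and the record shows who proposed and who accepted the change.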

On the ethical side, the actuarial code of conduct will need to extend to agent-guided decisions, mandating both statistical checks for algorithmic bias and peer review for model fairness. Reserving opinions and pricing justifications will increasingly need to include attestations of the rigorous processes undertaken to test for, mitigate, and document potential algorithmic biases, while transparently disclosing the inherent limitations and residual uncertainties in certifying any complex model as ‘fair’.

5.3. Institutional Responses to AI

The transition to agent automation not only transforms the daily work of actuaries; it also demands a fundamental reconfiguration of the profession’s institutional foundations. Key stakeholders, including training providers, employers, and regulators, will need to reassess each of their roles and approaches to preserve relevance, rigor, and credibility in this new landscape. Table 5.3 summarises their potential responses.

6. Practical Strategies for the Individual

The “AI 2027” scenario sketches a world in which capabilities advance at the pace of exponential hardware curves. Reality, particularly in insurance, is likely to be slower paced. Any major advance in automation will require technical stability, social negotiation, and regulatory consultation. Change, while inevitable, plays out over cycles defined not by technology, but by habit, legacy systems, and regulatory appetite. Nonetheless, the direction of travel is clear. As agents take on more responsibilities, the opportunity for actuaries is not to outpace the machine, but to learn how to drive it.

To position themselves at the forefront of the profession, actuaries should adopt the following pragmatic approaches to embrace AI in their career and work processes:

Inventory and Automate: Catalogue which of your current tasks are fully defined and rules-based. These are prime for agentic automation; invest time in learning how to specify, test, and audit the handover.

Define Judgement Boundaries: Identify points where ambiguity or open-ended reasoning is central. Ensure clear rationales are documented for why and how human review is maintained.

Develop Prompt Fluency: Treat interaction with agents as a new professional language, incorporating best practice from prompt engineering and scenario design.

Embed Governance Early: Participate in the drafting of process and governance charters that anticipate regulatory scrutiny. Insist that logs, decision audits, and version control are routine, not afterthoughts.

Cultivate Interdisciplinary Curiosity: The actuary who can discuss cyber risk, emerging health trends, and capital modelling, and translate each into clear instructions for agents, will retain their advantage long after foundational exams become rote for machines.

Institutional Engagement: Be active in shaping professional standards around automation, agent audit, and skills certification, ensuring the future of the discipline is credible and adaptive.

7. Closing Remarks

This next chapter for the actuary is not a break from the past, but a return to the profession’s roots. An actuary’s worth will now be measured less by their stored knowledge and more by their process of critical thinking and discernment. Intelligence is becoming a cheap commodity, yet this only increases the value of those who can question, frame, and direct it. As intellectual labour becomes commoditised, the bedrock skills of professional judgment, ethical oversight, and the ability to communicate uncertainty are no longer just part of the actuary’s job; they are the job.

References

Kokotajlo, D., Alexander, S., Larsen, T., Lifland, E., & Dean, R. (2025). AI 2027: Forecasts and Scenarios. https://ai-2027.com/

Kosmyna, N., Hauptmann, E., Yuan, Y. T., Situ, J., Liao, X. H., Beresnitzky, A. V., Braunstein, I., & Maes, P. (2025). Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. arXiv:2506.08872. https://arxiv.org/abs/2506.08872